(Image source: http://arrow.apache.org/)

What if all the best open-source data platforms could easily share (ahem) data with each other?

As data has proliferated and open-source software (OSS) has continued to dominate both the stacks and the business models of the top tech companies in the world, the number of different data platforms and tools has grown faster and faster.

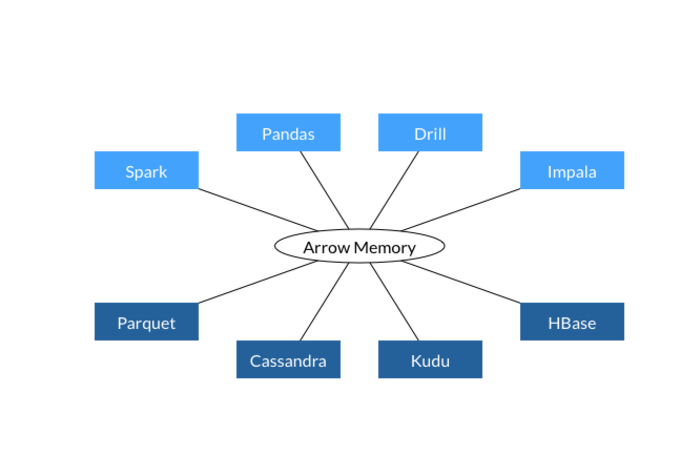

Having a hard time keeping up with the differences between Kudu, Parquet, Cassandra, HBase, Spark, Drill, and Impala? You're not alone, and that's one of the reasons we bring together top OSS contributors to these platforms to share at DataEngConf.

But there's one innovation that attempts to bind all of the above projects together by enabling them to share a common memory format. It's a new top-level Apache project called Arrow, and it aims to dramatically decrease the computation wasted on serializing and deserializing in-memory objects. That serialize/deserialize pattern is common in analytics applications that move data between systems, each of which has its own internal memory representation.
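To make the cost concrete, here is a minimal sketch of the two patterns using only Python's standard library. It does not use Arrow's API: the `column` data, the "system A" and "system B" roles, and the choice of `pickle` as the serializer are all assumptions made for illustration. It only shows the general idea that an agreed-upon memory layout lets a consumer read the producer's buffer directly instead of copying it.

```python
import pickle
from array import array

# Hypothetical column of 64-bit floats owned by "system A".
column = array("d", [1.5, 2.5, 3.5, 4.5])

# Pattern 1: serialize, hand the bytes over, deserialize.
# "System B" ends up with its own independent copy of the data.
payload = pickle.dumps(column)
copy_in_b = pickle.loads(payload)

# Pattern 2: both sides agree on the layout (contiguous float64 values),
# so "system B" reads the same buffer through a view, with no copy.
view_in_b = memoryview(column)

# Changing the original shows which consumer shares memory with it.
column[0] = 99.0
print(copy_in_b[0])  # still the old value: the copy is independent
print(view_in_b[0])  # the new value: the view reads the same memory
```

In the first pattern every value is encoded and decoded once per hop between systems; in the second, the only thing shared is an understanding of how the bytes are laid out.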